Docker Container Security: Challenges and Best Practices

The containerization of software and applications continues to grow, and although alternatives have emerged to challenge Docker, it still enjoys major adoption among developers for building and sharing software and apps. In 2022, Docker estimated that 44% of developers were using some form of continuous integration and deployment (CI/CD) with Docker containers. This sustained growth means that container security remains a critical consideration for organizations that use containers for development or production. Because containers are in many respects more complex than virtual machines and the other deployment technologies that were widely used before Docker, learning how to secure Docker containers can be challenging.

In this article, we offer an overview of Docker, how it works, why securing Docker containers is challenging, and which best practices and tools you should adopt when monitoring your containers for security.

What is Docker?

Docker is an open platform used to develop, ship and run applications. It packages software into containers — standardized, executable components that have everything your software needs to run including libraries, system tools, code, and runtime. These containers combine application source code with the operating system libraries and dependencies required to run that code.

Docker makes containerization faster, easier and safer. It enables developers to ship, test and deploy code quickly and to scale applications into any environment with confidence that the code will run.

Docker was created to work on the Linux platform, but it also supports other operating systems, including Microsoft Windows and Apple macOS. You can also use versions of Docker for Amazon Web Services (AWS) and Microsoft Azure.

In 2022, over 13 million developers used Docker.

How Docker works

Docker acts much like an operating system for containers, providing a standard way to run your code. Just as a virtual machine virtualizes server hardware, containers virtualize the operating system of a server. Docker is installed on each server and provides simple commands you can use to build, start, or stop containers.

Docker enables you to package and run an application in a container. The container packages the application’s service or function with all of the libraries, configuration files, dependencies and other parts needed for it to work. Each container shares the services of one underlying operating system. Docker uses resource isolation in the operating system kernel to run multiple containers on the same operating system.

You can run many containers simultaneously on a given host. As containers hold everything needed to run the application, you don’t need to rely on what is currently installed on the host. You can share containers while you work and know that everyone uses the same container that operates in the same way. With Docker, you can significantly reduce the delay between writing code and running it in production.

When you use Docker, you create and use images, containers, networks, volumes, plugins, and other objects. Docker images contain all the dependencies needed to execute code inside a container, so containers that move between Docker environments with the same operating system work with no changes.

An image is a read-only template with instructions for creating a Docker container. An image can be based on another image, with some additional customization. You can create your own images with a Dockerfile or use those created by others and published in a registry.

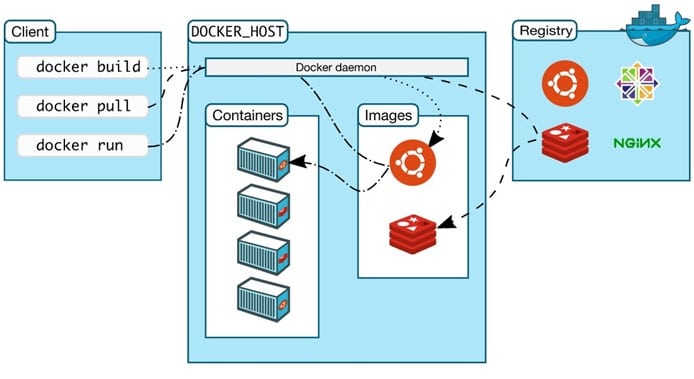

As Docker itself explains, Docker uses a client-server architecture. The Docker client (docker) is the main way that most Docker users interact with Docker. It sends commands to the Docker daemon using the Docker API, a REST API, over UNIX sockets or a network interface. The daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. It does the work of building, running and distributing Docker containers, and it can also communicate with other daemons to manage Docker services. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client can communicate with more than one daemon.
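To make the client-daemon relationship concrete, here is a minimal sketch of a client that speaks HTTP to the daemon over its UNIX socket, using only the Python standard library. It assumes the daemon listens on the common default path /var/run/docker.sock and that the caller has permission to open that socket. The class and function names are illustrative and are not part of Docker.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills in the Host header; the socket path
        # decides where the bytes actually go.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_version(socket_path: str = "/var/run/docker.sock") -> dict:
    """Ask the daemon for its version details via GET /version."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()


# Example (requires a running daemon and access to the socket):
#   info = docker_version()
#   print(info.get("Version"))
```

Anyone who can open this socket can drive the daemon, which is one reason the daemon itself appears on the list of components to secure later in this article.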

Docker registries store Docker images. The primary example is Docker Hub. This is a public registry, and Docker is configured to look for images there by default. You can also run private registries. When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

You can install the Docker Desktop application for Mac, Windows or Linux so that you can easily use these environments to build and share containerized applications and microservices. The Desktop includes the daemon (dockerd) and the client, plus other Docker tools such as Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper.

Why is Docker container security such a challenge?

Before Docker, most organizations used virtual machines or bare-metal servers to host applications. From a security perspective, these technologies are relatively simple. You need to focus on just two layers (the host environment and the application) when hardening your deployment and monitoring for security-relevant events. You also typically do not need to worry much about APIs, overlay networks or complex software-defined storage configurations, because these are not usually a major part of virtual-machine or bare-metal deployments.

Docker container security is more complicated, largely because a typical Docker environment has many more moving parts that should be protected. Those parts include:

- Your containers. You probably have multiple Docker container images, each hosting individual microservices. You probably also have multiple instances of each image running at a given time. Each of those images and instances needs to be secured and monitored separately.

- The Docker daemon, which needs to be secured to keep the containers it hosts safe.

- The host server, which could be bare metal or a virtual machine.

- If you host your containers in the cloud using a service like ECS, that is another layer to secure.

- Overlay networks and APIs that facilitate communication between containers.

- Data volumes or other storage systems that exist externally from your containers.

So, if you feel like learning how to secure Docker containers is tough, you’re right. With this many layers to protect, Docker security is more complicated than securing a virtual-machine or bare-metal deployment.

What best practices and tools can improve Docker container security?

Fortunately, that challenge can be overcome. While this article doesn’t profess to be an exhaustive guide to Docker security, the following are some best practices and types of tools that can help you.

#1 Set resource quotas

One handy thing that Docker makes easy is to configure resource quotas on a per-container basis. Resource quotas allow you to limit the amount of memory and CPU resources that a container can consume.

This feature is useful for several reasons. It can help to keep your Docker environment efficient and prevent one container or application from hogging system resources. But it also enhances security by preventing a compromised container from consuming a large number of resources in order to disrupt service or perform malicious activities.

Resource quotas are easy to set using command-line flags. For full details, see the Docker documentation.
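As a rough illustration, the sketch below builds a docker run command line that caps memory, CPU and process count for a single container. The --memory, --cpus and --pids-limit flags are standard docker run options. The function name, the default values and the nginx:1.25 image are assumptions chosen for the example, not recommendations.

```python
import shlex


def docker_run_with_quotas(image, memory="256m", cpus=0.5, pids_limit=100):
    """Build a `docker run` argument list with per-container limits."""
    return [
        "docker", "run", "--detach",
        "--memory", memory,                 # hard cap on RAM for the container
        "--cpus", str(cpus),                # share of CPU cores it may use
        "--pids-limit", str(pids_limit),    # cap on processes (limits fork bombs)
        image,
    ]


# Illustrative values only.
command = docker_run_with_quotas("nginx:1.25", memory="512m", cpus=1.5, pids_limit=200)
print(shlex.join(command))

# To actually start the container (requires Docker to be installed):
#   import subprocess
#   subprocess.run(command, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems if the image name or limits ever come from user input.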

#2 Don’t run as root

We’ve all been there: You are tired and you don’t want to fight with permission settings in order to get an application to work properly, so you just run it as root so that you don’t have to worry about permission restrictions.

That might be OK to do in a Docker testing environment if you’re learning how to use Docker for the first time, but in production, there is almost never a good reason to let a Docker container run with root permissions.

This Docker security best practice takes a little deliberate effort, because unless an image or a run command says otherwise, the process inside a Docker container runs as root by default. To avoid that, set a non-root user with the USER instruction in your Dockerfile, or pass the --user flag to docker run. You also have to resist the temptation to let a container run as root simply because it’s more convenient in some situations.
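One way to catch root containers before they ship is to check each Dockerfile for a non-root USER instruction. The sketch below is a deliberately simple heuristic, not a full Dockerfile parser: it ignores line continuations and build arguments, and it conservatively treats a final build stage with no USER instruction as root even though a base image may already set a non-root user. The function name is hypothetical.

```python
def runs_as_root(dockerfile_text: str) -> bool:
    """Return True if the final build stage has no USER or ends as root."""
    user = None
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        keyword = parts[0].upper()
        if keyword == "FROM":
            # Each build stage starts over; forget any earlier USER.
            user = None
        elif keyword == "USER" and len(parts) == 2:
            # USER may be "name", "uid", "name:group" or "uid:gid".
            user = parts[1].split(":", 1)[0].strip()
    return user is None or user in ("root", "0")


example = """\
FROM alpine:3.19
RUN adduser -D app
USER app
CMD ["sh"]
"""
print(runs_as_root(example))
```

A check like this fits naturally into a CI step that fails the build when it returns True.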

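For added Docker security, if you use Kubernetes to orchestrate your containers, you can explicitly prevent containers from starting as root (even if an admin attempts to start one manually) by setting runAsNonRoot: true in the pod or container security context. Older clusters enforced this with the MustRunAsNonRoot rule in a pod security policy, but PodSecurityPolicy was removed in Kubernetes 1.25. The built-in Pod Security Admission controller, using the restricted profile, now fills that role.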
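If you keep pod specifications in version control, a small check can flag any pod that does not require non-root execution. The sketch below assumes the pod spec has already been loaded into a Python dictionary (for example, from YAML) and inspects the runAsNonRoot field of the Kubernetes securityContext, where a container-level value takes precedence over the pod-level one. The function name and the sample spec are illustrative.

```python
def pod_requires_non_root(pod_spec: dict) -> bool:
    """Return True only if every container is covered by runAsNonRoot: true."""
    pod_default = pod_spec.get("securityContext", {}).get("runAsNonRoot", False)
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    if not containers:
        return False  # nothing to verify, so do not report the pod as safe
    for container in containers:
        setting = container.get("securityContext", {}).get("runAsNonRoot")
        # A container-level value overrides the pod-level default.
        effective = pod_default if setting is None else setting
        if not effective:
            return False
    return True


# Hypothetical pod spec fragment.
spec = {
    "securityContext": {"runAsNonRoot": True},
    "containers": [
        {"name": "web", "image": "registry.example.internal/web:1.0"},
        {"name": "sidecar", "image": "registry.example.internal/proxy:2.3"},
    ],
}
print(pod_requires_non_root(spec))
```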

#3 Secure your container registries

Container registries are part of the reason Docker is so powerful. They make it easy to set up a central repository from which you can download container images with a few keystrokes.

However, the ease and convenience of container registries can become a security risk if you fail to evaluate the security context of the registry you’re using. Ideally, you’ll use a registry such as Docker Trusted Registry (now Mirantis Secure Registry) that can be installed behind your own firewall in order to mitigate the risk of breaches from the Internet.

Even if the registry is accessible only from behind the firewall, you should resist the temptation to let anyone upload or download images from your registry at will. Instead, use role-based access control to define explicitly who can access what, and deny access to everyone else. It can be tempting to leave your registry open to simplify access and avoid configuring new roles each time someone needs access, but that inconvenience is worth it if it prevents a breach in your registry.
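The deny-by-default idea can be sketched in a few lines. Real registries implement role-based access control through their own configuration, so the role names, actions and users below are hypothetical and only illustrate the logic: an action is permitted when one of the user’s roles explicitly grants it, and refused in every other case.

```python
# Hypothetical roles mapped to the registry actions they grant.
ROLE_PERMISSIONS = {
    "developer": {"pull"},
    "release-engineer": {"pull", "push"},
    "registry-admin": {"pull", "push", "delete"},
}


def is_allowed(user_roles: dict, user: str, action: str) -> bool:
    """Allow an action only if one of the user's roles explicitly grants it."""
    roles = user_roles.get(user, ())  # unknown users have no roles
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# Hypothetical role assignments.
assignments = {
    "alice": ["developer"],
    "bob": ["release-engineer"],
}

print(is_allowed(assignments, "alice", "pull"))
print(is_allowed(assignments, "alice", "push"))
print(is_allowed(assignments, "mallory", "pull"))
```

Because there is no fallback branch that grants access, forgetting to assign a role fails closed rather than open.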

#4 Use trusted, secure images

Speaking of registries, you should also be sure that the container images you pull come from a trusted source. This may seem overly obvious, but given that there are so many publicly available container images that can be downloaded quickly, it can be easy to pull an image accidentally from a source that is not verified or trusted.

For this reason, you should consider blocking public container registries other than official trusted repositories, such as those on Docker Hub.
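A policy like this can be approximated with an allowlist check on image references before anything is pulled. The sketch below follows Docker’s convention that the first path component is a registry host only if it contains a dot or a colon or is localhost, and that official Docker Hub images live under the library/ namespace. The private registry name is a placeholder, and the parsing is simplified (for example, it does not treat index.docker.io as an alias for Docker Hub).

```python
# Placeholder for a registry you operate yourself.
TRUSTED_PRIVATE_REGISTRIES = {"registry.example.internal:5000"}


def split_reference(image_ref: str):
    """Return (registry, repository) with any tag or digest removed."""
    name = image_ref.split("@", 1)[0]  # drop a digest such as @sha256:...
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name  # Docker Hub is the default
    # A tag, if present, follows a ':' in the final path component.
    head, slash, last = repository.rpartition("/")
    repository = head + slash + last.split(":", 1)[0]
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository  # official images namespace
    return registry, repository


def is_trusted(image_ref: str) -> bool:
    """Accept private-registry images and official Docker Hub images only."""
    registry, repository = split_reference(image_ref)
    if registry in TRUSTED_PRIVATE_REGISTRIES:
        return True
    return registry == "docker.io" and repository.startswith("library/")


for ref in ("nginx:1.25", "someuser/nginx:latest", "ghcr.io/org/app:1.0"):
    print(ref, is_trusted(ref))
```

The same check could run in a CI job or an admission hook, so that an untrusted reference is rejected before the image ever reaches a host.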

You can also take advantage of image scanning tools to help identify some known vulnerabilities within Docker images. Most enterprise-level container registries have built-in scanning tools. Some of them, like Clair, can be used separately from a registry to scan individual images, too.

#5 Identify the source of your code

Keep in mind that Docker images typically contain a mixture of original code and packages from upstream sources. So, even if the specific image you download comes from a trusted registry, the image could incorporate packages from other sources that may be less trustworthy. To make matters even more complicated, those packages could themselves be composed of code drawn from multiple sources, including third-party open source repositories, although the origins of the code may not always be clear from looking at the package itself.

In this context, source code analysis tools are useful. By downloading the sources of all packages in your Docker images and scanning them to identify where the code originated, you can determine whether any of the code incorporated into your container images contains known security vulnerabilities. As an added benefit, source code analysis also helps you remain compliant with licensing requirements involving third-party code, which could affect you even if the packages you use don’t mention other licenses. A tool like Mend Container continuously detects vulnerabilities and manages licenses, from early development all the way to production. It provides automated policy enforcement and real-time alerts, integrates with continuous integration, and keeps your open source components secure and compliant throughout the development lifecycle from inside your containerized environments.

#6 API and network security

As noted above, Docker containers typically rely heavily on APIs and networks to communicate with each other. That’s why it’s essential to make sure that your APIs and network architectures are designed securely, and that you monitor the APIs and network activity for anomalies that could indicate an intrusion.

Since APIs and networks are not a part of Docker itself but are instead resources that you use in conjunction with Docker, steps for securing APIs and networks are beyond the scope of this article. However, the core message here is that API and network security are particularly important when you use Docker, so they shouldn’t be neglected.

Conclusion

Docker is a complicated beast, and there is no simple trick you can use to maintain Docker container security. Instead, you have to think holistically about ways to secure your Docker containers, and harden your container environment at multiple levels. Doing so is the only way to ensure that you can reap all the benefits of Docker containers without leaving yourself at risk of major security problems.

FAQs

What is a Docker container?

Docker itself defines a container as a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries, and settings. Container images become containers at runtime. In the case of Docker, images become containers when they run on the Docker Engine.

What is a Docker network?

A network enables communication between processes. A Docker network is mainly used to enable communication among Docker containers, and between containers and non-Docker workloads, via the host machine where the Docker daemon is running.

What are the risks of using Docker?

The main security risks of using Docker are:

- Unrestricted traffic. Some versions of Docker allow all network traffic between containers on the same host by default. This can expose data to the wrong containers.

- Vulnerable and malicious container images. Because Docker Hub is open to everyone, untrustworthy publishers could publish unstable or corrupted versions of common images in the Docker Hub registry.

- Unrestricted access. It’s possible for attackers to access numerous containers once they’ve infiltrated the host via the system file directory.

- Vulnerable host kernel. The kernel is shared by the host and all containers, so if a container triggers a security problem in a vulnerable kernel, it could compromise the entire host.

- Container breakouts. These take place when malicious actors access the host or other containers from a compromised container. If a process breaks out of a container, it keeps the privileges it had inside the container when it reaches the host. Furthermore, a single compromised container can lead to other containers being compromised.

- Compliance. This can be challenging to monitor and enforce, owing to the fast-moving nature of container environments.