How Can Application Security Cope With The Challenges Posed by AI?


This is the third part of a blog series on AI-powered application security. Following the first two parts, which presented concerns associated with AI technology, this part suggests approaches to coping with AI-related concerns and challenges.

In my previous blog posts, I presented major implications of AI use on application security, and examined why a new approach to application security may be required to cope with these challenges. This blog explores select application security strategies and approaches to address the security challenges posed by AI.

Calling for a paradigm shift in application security

Comprehensive assessment of an application’s security posture involves analyzing the application to detect pertinent vulnerabilities. This entails, among other things, knowledge of code provenance. Knowing the origin of your code can expedite the remediation of vulnerabilities and facilitate effective incident response, because it helps an organization infer who is likely to be responsible for code maintenance and vulnerability fixes.
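To make the provenance point concrete, here is a minimal Python sketch. It assumes a hypothetical in-house record of provenance per source file (an origin label plus a responsible owner); the names `Origin`, `Provenance`, `Finding`, `route_findings`, and `count_by_origin` are illustrative and do not belong to any particular tool. The sketch shows how scanner findings could be routed to whoever is likely responsible for the fix, and how findings could be tallied by code origin.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    """Hypothetical provenance categories for a unit of code."""
    HUMAN = "human"
    AI_GENERATED = "ai_generated"
    THIRD_PARTY = "third_party"


@dataclass(frozen=True)
class Provenance:
    path: str       # file the record describes
    origin: Origin  # where the code came from
    owner: str      # team or vendor expected to maintain and fix it


@dataclass(frozen=True)
class Finding:
    path: str     # file in which the scanner reported the issue
    rule_id: str  # e.g. a CWE identifier


def route_findings(findings, records, fallback="unassigned-triage"):
    """Group findings by the owner recorded for their file.

    Findings in files without a provenance record go to the fallback
    queue, which is where missing provenance slows remediation down.
    """
    by_path = {r.path: r for r in records}
    routed = {}
    for finding in findings:
        record = by_path.get(finding.path)
        owner = record.owner if record else fallback
        routed.setdefault(owner, []).append(finding)
    return routed


def count_by_origin(findings, records):
    """Count findings per code origin (None when provenance is unknown)."""
    by_path = {r.path: r.origin for r in records}
    counts = {}
    for finding in findings:
        origin = by_path.get(finding.path)
        counts[origin] = counts.get(origin, 0) + 1
    return counts
```

The fallback queue makes the cost of missing provenance visible: every finding that lands there needs manual investigation before anyone can start fixing it. Origin labels such as `AI_GENERATED` are, of course, only as reliable as the process that records them.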

Historically, as computer-generated data exploded in volume, the industry was compelled to form new concepts and think in new terms, such as “big data”. AI is galvanizing a similar shift in thinking because of the relative ease with which its power can be abused by malicious actors, and the potential extent of such abuse. With AI producing machine-generated code, it is conceivable that, in the near future, most detected software security vulnerabilities requiring attention will be associated with a non-human author.

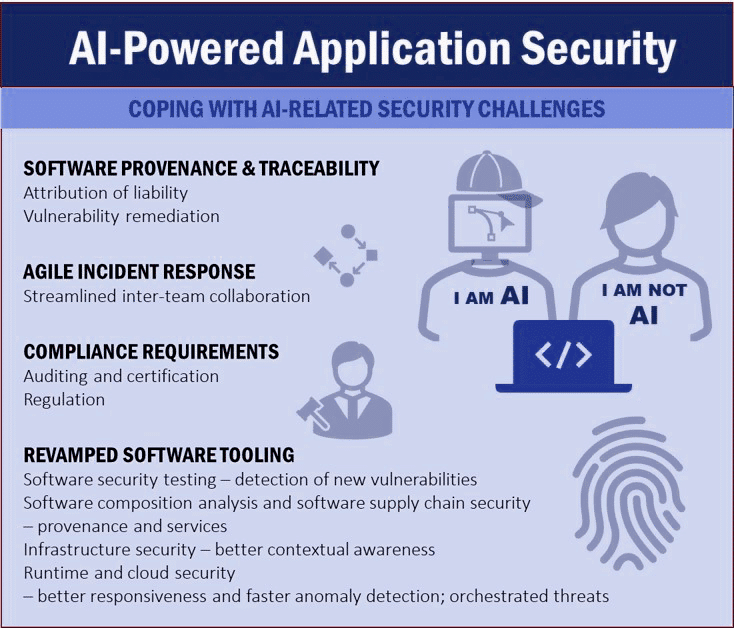

AI doesn’t just underscore the significance of quick pattern and anomaly detection. It excels in such areas. What’s more, it can significantly alleviate the challenge faced by organizations struggling to discern true security vulnerabilities among the noisy results often produced by non-AI solutions. Application security tools should correspondingly be updated or even re-designed to cope effectively with such a challenge.

What can the AppSec industry do to accommodate AI security challenges?

The potential scope of AI-related risk necessitates significant changes to application security tools. Vulnerability management (VM) and attack surface management (ASM) solutions will need to factor in new types of threats and revise asset risk assessments. Application security testing (AST) and software composition analysis (SCA) solutions will need to detect and handle AI-related software vulnerabilities, update risk scoring, and enhance prioritization and remediation. Production-focused security solutions will need to support enhanced detection, reporting, and response workflows to satisfy new AI-related auditing and regulatory compliance requirements, and to assuage concerns regarding the security of the organization’s assets. These include cloud-native application protection platforms (CNAPP), cloud security posture management (CSPM), cloud workload protection platforms (CWPP), security information and event management (SIEM), and security orchestration, automation and response (SOAR) solutions, among others.

AI also highlights the significance of certain aspects of software, such as provenance, that were arguably less pronounced in the not-so-distant past. SCA tools enable organizations to understand the software components within an application, their licensing details, and their reported security vulnerabilities. SCA already plays a pivotal role in application security and software supply chain security, and its importance is likely to grow with the advent of AI-powered software. However, coping with AI-related security challenges requires security solutions such as SCA to extend their purview beyond software components to connected and dependent services, because malicious actors using AI technology may exploit those services as well. The potentially dynamic nature of inter-service dependencies has significant implications for the software bill of materials (SBOM), which is critical to software supply chain security.
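One way to picture an SBOM whose purview extends to services is sketched below in Python. It assumes the CycloneDX JSON format, which defines separate `components` and `services` arrays, although the post itself does not name a format; only a handful of fields are shown, and `build_sbom`, `undeclared_endpoints`, and the example endpoints are hypothetical. The drift check compares declared service endpoints with those observed at runtime, reflecting how dynamic inter-service dependencies can leave a static SBOM stale.

```python
import json


def build_sbom(components, services):
    """Build a minimal CycloneDX-style SBOM listing libraries *and* services.

    components: iterable of (name, version) tuples for bundled libraries.
    services:   mapping of service name -> list of endpoint URLs the app calls.
    Only a small subset of CycloneDX fields is populated (an assumption).
    """
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "components": [
            {"type": "library", "bom-ref": f"{name}@{version}",
             "name": name, "version": version}
            for name, version in components
        ],
        "services": [
            {"bom-ref": f"service:{name}", "name": name,
             "endpoints": sorted(endpoints)}
            for name, endpoints in sorted(services.items())
        ],
    }


def undeclared_endpoints(sbom, observed_endpoints):
    """Return endpoints observed at runtime that no declared service covers.

    A non-empty result means the SBOM has drifted from the application's
    actual inter-service dependencies.
    """
    declared = {
        endpoint
        for service in sbom.get("services", [])
        for endpoint in service.get("endpoints", [])
    }
    return sorted(set(observed_endpoints) - declared)


if __name__ == "__main__":
    # Hypothetical example data.
    sbom = build_sbom(
        components=[("requests", "2.31.0")],
        services={"llm-api": ["https://llm.example.com/v1"]},
    )
    print(json.dumps(sbom, indent=2))
    print(undeclared_endpoints(sbom, [
        "https://llm.example.com/v1",
        "https://plugin.example.net/hook",
    ]))
```

A real pipeline would validate the document against the official CycloneDX schema and would gather observed endpoints from a runtime source such as egress logs; both steps are omitted here.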

Can regulation alone assuage AI-related security concerns?

Regulations are often regarded as a roadblock rather than a motivating stimulus. However, given the unprecedented and still untapped power of AI, regulation is imperative to ensure continued AI evolution without disastrous consequences to critical data, systems, services and infrastructure. Without adequate regulation, it may not be long before it becomes extremely difficult, if not impossible, for organizations to maintain agile software delivery while successfully coping with AI-related issues.

As I noted in my previous blog post, it is important to implement security for AI, including AI-related restrictions. Granted, it is not trivial to establish such restrictions, nor to determine how they should apply to AI technology, its provider, or the organization that leverages it. Nevertheless, to reap the benefits of AI without sacrificing application security, it’s crucial to consider viable approaches to prevent, or at least limit, the damage AI technology can cause. Coping with the potential risks stemming from unbridled use of AI technologies may warrant a radically new approach to application security tooling, processes, and practices.

That is not to say that implementing AI-related regulations and guidelines would be easy, or merely an extension of what is currently in place. In fact, it is questionable whether regulation alone is sufficient to help organizations address some of the challenges posed by AI. The industry already struggles to establish whether a given piece of software contains code that was generated using AI. It may also be challenging to predict the expected outcome of AI usage, or to reliably establish whether such usage results in the expected behavior. There are numerous aspects that regulation is less likely to address, but it may be able to set an important security bar for critical systems that are subject to regulatory compliance, imposing restrictions that prevent or mitigate security pitfalls that would otherwise be more likely to emerge.

Whereas regulations often take a while to gain traction or acceptance, AI has evolved incredibly rapidly over the past couple of years. Keeping pace with technology is de rigueur for technology organizations in the contemporary business world, but there is arguably no precedent for the skyrocketing interest in AI technology seen lately. Nevertheless, AI’s risks demand that responsible steps be taken to avoid serious security consequences. Governments are already devising AI-related cybersecurity strategies, acknowledging the risks and threats that can emerge from AI’s rapid technological evolution. In short, regulation should not be treated as a “nice-to-have” option. It’s a “must-have.”

Getting ready for a new AI-powered application security world

AI may represent the most powerful opportunity ever to enable developers to produce software code at unprecedented speed and efficiency, but such power has security repercussions that must be taken into account. Unfortunately, the remarkable rise of AI technology has not been matched by application security readiness, and it might take time before AI-powered solutions become less susceptible to AI-related risks. As AI gains an increasingly strong foothold in software development, it has become crucial to expedite regulation efforts.

It is also important to note the pronounced effect AI has on open source software, which raises serious questions about the future of open source. While AI arguably shatters various assumptions associated with open source software development (such as authorship and licensing classification), it could ultimately encourage increased collaboration and software sharing, which is fertile ground for open source software.

We are witnessing the inception of AI-powered application development. As the industry anticipates the extent to which AI will change the landscape in which application security operates, we must not be complacent about our ability to predict its outcomes. It would be irresponsible to underestimate the pace and magnitude of AI’s impact, and it’s a challenge that we must be ready to tackle.