Sometimes A Vulnerability Isn’t So Vulnerable

As AppSec professionals, we are keenly aware of how bad it can be out there when it comes to keeping software products secure. There are some really bad offenders, and even worse are the ostriches who keep their heads in the sand, afraid of what they might find if they open the can of worms that is their open source component usage.

Many developers and their organizations know that if they take a hard look at their open source usage, they may find components with high-severity vulnerabilities, which developers will then have to spend hours researching and remediating. Nothing is more frustrating than going into your code and realizing that crucial elements of your product were all built on a component that could give attackers remote code execution in your software, forcing you to rework your code with something more secure.

Software Composition Analysis tools have come a long way in recent years to help us keep from adding open source components with known vulnerabilities into our products in the first place. Automation and continuous monitoring have been fantastic at telling us what we have in our product, identifying components with high levels of accuracy and alerting us if there is a known vulnerability associated with the component.

However, even as these tools have gotten pretty good at telling us what we are using, they have not yet been able to tell us how we are using them. Until this year.

Effective vs ineffective: Do all vulnerabilities impact the security of our products?

When we receive an alert that an open source component has a known vulnerability associated with it, we are only getting a part of the story.

To be sure, this open source component does, in fact, have a known vulnerability, but it might not actually be putting our product at risk. What matters here is whether or not the vulnerable functionality is receiving calls from our proprietary code.

If so, then we deem it to be effective. If not, then it is ineffective.

While an ineffective vulnerability is still an issue that will need to be addressed, it does not directly threaten your product and we can think about it as a lower priority risk.

To determine which vulnerabilities are effective, we need to run an effective usage analysis scan on the project, mapping out which vulnerable functionality is called from our proprietary code and which is not.
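One way to picture such a scan is as a reachability check over a call graph. The sketch below is only an illustration under stated assumptions, not how the Mend scanner works internally: the call graph, the entry point `app.main`, and the `lib_a` / `lib_b` function names are all hypothetical.

```python
from collections import deque

def reachable_from(call_graph, entry_points):
    """Return every function reachable from the entry points by following calls."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        caller = queue.popleft()
        for callee in call_graph.get(caller, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen

def classify(call_graph, entry_points, vulnerable_functions):
    """Label a vulnerable function 'effective' if our own code can reach it."""
    reachable = reachable_from(call_graph, entry_points)
    return {
        fn: "effective" if fn in reachable else "ineffective"
        for fn in vulnerable_functions
    }

# Hypothetical call graph: caller -> list of callees.
call_graph = {
    "app.main": ["app.load_config", "lib_a.parse"],
    "app.load_config": ["lib_c.read"],
    "lib_a.parse": ["lib_a.unsafe_load"],         # vulnerable, and we call into it
    "lib_b.expand": ["lib_b.fetch_remote"],       # vulnerable, but nothing of ours calls it
}

result = classify(
    call_graph,
    entry_points=["app.main"],
    vulnerable_functions=["lib_a.unsafe_load", "lib_b.fetch_remote"],
)
print(result)
```

Here `lib_a.unsafe_load` comes out as effective because `app.main` reaches it through `lib_a.parse`, while `lib_b.fetch_remote` is ineffective: the component is present, but no path from our code leads to the vulnerable function.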

According to our research into over two thousand vulnerable Java components, only 30% were found to be effective. Conversely, this means that 70% were ineffective and posed no direct security risk to our product. The implications for developers dealing with the question of how to prioritize the mountain of alerts that they are being buried under are staggering, as it suggests that roughly 70% of legitimate alerts do not need their immediate attention.

In hopes that these findings would hold up once they were out of the lab and in more realistic conditions, we decided to use our developers as guinea pigs and try some of our own medicine before bringing it to the public.

Meet our test bunnies

Despite their initial suspicions, our test subjects, I mean talented developers, were pleasantly surprised by what they saw.

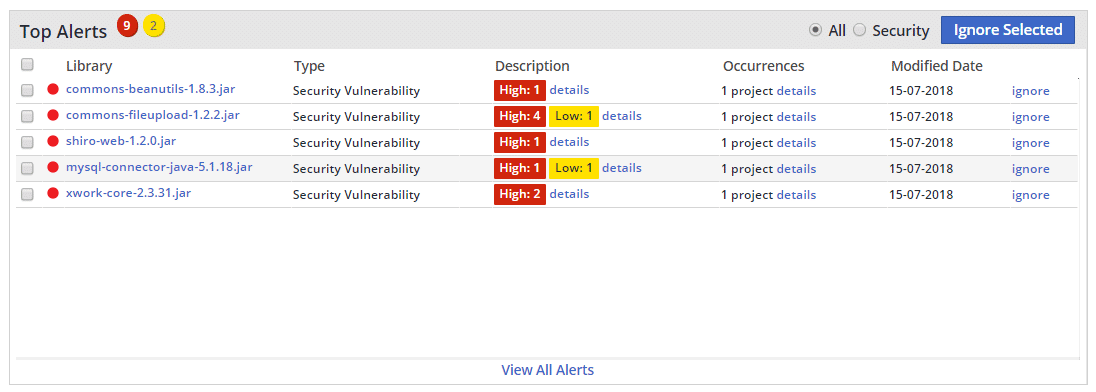

For years, our team has been used to looking at their projects on the Mend dashboard, seeing which ones were found to have known vulnerabilities, and receiving important information like suggested fixes, which make remediations faster by saving them the time of researching their next steps by themselves.

What they lacked, however, was an easy way to prioritize their remediations other than perhaps by which ones had the most severe CVSS ratings. While taking out the gnarliest vulnerabilities first may seem to make sense, this wasn’t necessarily the most impactful approach, since they had no way of actually knowing whether those vulnerabilities were effective.

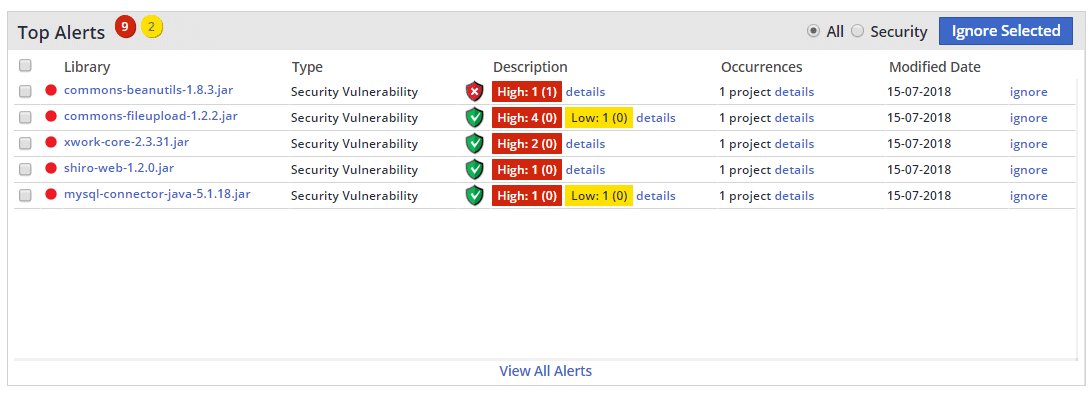

Once they began the effective usage analysis on their projects, our developers had a Matrix-like revelation, seeing their mountain of alerts turn into an orderly collection that was ready to be tackled.

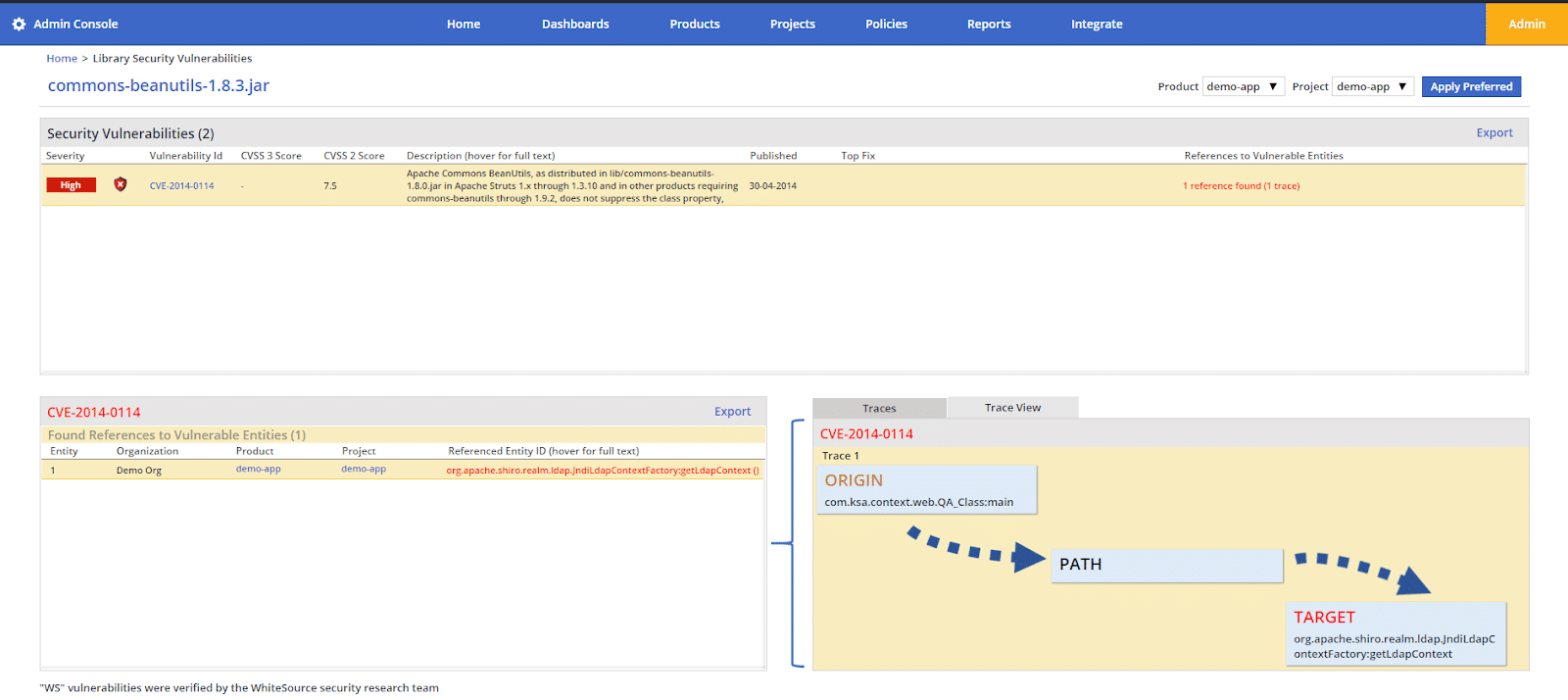

Upon the new review, our developers could understand at a glance whether or not a vulnerable component was effective. If a component had a red shield, it meant that it got sorted to the top of the pile. A green shield meant that it got pushed down, not forgotten, but not a cause for skipping lunch either.
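The ordering described above can be sketched as a two-level sort: effective alerts first, then by severity within each group. This is a minimal illustration, assuming a hypothetical `Alert` record and made-up component names and CVSS scores; it is not the dashboard's actual data model.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    component: str
    cvss: float
    effective: bool  # True -> red shield, False -> green shield

def prioritize(alerts):
    """Effective alerts first; within each group, highest CVSS score first."""
    return sorted(alerts, key=lambda a: (not a.effective, -a.cvss))

# Hypothetical alerts for illustration only.
alerts = [
    Alert("lib-a", 9.8, effective=False),
    Alert("lib-b", 6.5, effective=True),
    Alert("lib-c", 7.4, effective=True),
]

ordered = [a.component for a in prioritize(alerts)]
print(ordered)  # ['lib-c', 'lib-b', 'lib-a']
```

Note that `lib-a` has the highest CVSS score but lands at the bottom, because nothing in the product calls its vulnerable functionality. Sorting by severity alone would have put it first.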

When a vulnerable open source component was deemed a high-priority remediation, one of the new features that pleasantly surprised our team was the trace analysis. This trace not only showed the developer exactly where the vulnerable component was in their code, down to the line in fact, but also acted as proof that they weren’t off on a wild goose chase.

It was also valuable to them in that it showed them all the other parts of their code which were dependent on the vulnerable functionality, helping them to minimize unexpected issues later post-remediation when they implemented the suggested fix.
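To make the idea of a trace concrete, here is a rough sketch that finds one call path from our code to a vulnerable function and lists everything that depends on it. It works at function level on a hypothetical call graph (the names `app.*` and `lib_a.*` are invented); the real trace is described as going down to the line, which this simplified version does not attempt.

```python
from collections import deque

def find_trace(call_graph, entry, target):
    """Return one shortest call path from entry to target, or None if unreachable."""
    parents = {entry: None}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for callee in call_graph.get(node, ()):
            if callee not in parents:
                parents[callee] = node
                queue.append(callee)
    return None

def dependents(call_graph, target):
    """Return every function that can reach target, directly or transitively."""
    callers = {}
    for caller, callees in call_graph.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)
    seen, stack = set(), [target]
    while stack:
        for caller in callers.get(stack.pop(), ()):
            if caller not in seen:
                seen.add(caller)
                stack.append(caller)
    return seen

# Hypothetical call graph: caller -> list of callees.
call_graph = {
    "app.main": ["app.import_data", "app.export_report"],
    "app.import_data": ["lib_a.parse"],
    "app.export_report": ["lib_a.parse"],
    "lib_a.parse": ["lib_a.unsafe_load"],
}

trace = find_trace(call_graph, "app.main", "lib_a.unsafe_load")
affected = dependents(call_graph, "lib_a.unsafe_load")
print(" -> ".join(trace))
print(sorted(affected))
```

The trace serves as the proof that the vulnerable function is actually called, and the set of dependents is the list of places worth retesting after the fix goes in.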

Long-term implications for productivity

Since implementing effective usage analysis as a part of our open source security process, Mend has succeeded in standardizing prioritization within our organization, based on an objective understanding of whether a vulnerable component is effective.

Our developers still don’t like having to deal with remediations (after all, nobody got into programming to redo their work later), but they now spend less time overall on these operations from start to finish, knowing that their efforts are worth the time.

Now when a vulnerability pops up on their dashboard with a green shield next to it, our developers can legitimately say that it is not such a huge deal and that it’ll be alright to get to it tomorrow.