The New Era of AI-Powered Application Security. Part One: AI-Powered Application Security: Evolution or Revolution?

This is the first part of a three-part blog series on AI-powered application security.

This part presents concerns associated with AI technology that challenge traditional application security tools and processes. Part Two covers considerations about AI security vulnerabilities and AI risk. Part Three covers suggested approaches to cope with AI challenges.

Imagine the following scenario. A developer is alerted by an AI-powered application security testing solution about a severe security vulnerability in the most recent code version. Without concern, the developer opens a special application view that highlights the vulnerable code section alongside a display of an AI-based code fix recommendation, with a clear explanation of the corresponding code changes. ‘Next time’, the developer ponders after committing the recommended fix, ‘I’ll do away with the option to review the suggested AI fix and opt for this automatic AI fix option offered by the solution.’

Now imagine another scenario. A development team is notified that a runtime security scan detected a high-severity software vulnerability in a critical application, one suspected to have been exploited by a malicious actor. On further investigation, the vulnerable code is found to have originated in a code fix recommended by the organization’s AI-powered application security testing tool.

Two scenarios. Two different outcomes. One underlying technology whose enormous potential for software development is only matched by its potential for disastrous security consequences.

Developing secure software has never been an easy task. As organizations struggle to accommodate highly demanding software release schedules, effective application security often presents daunting challenges to R&D and DevOps teams, not least due to the ever-increasing number of software security vulnerabilities needing inspection. AI has been garnering attention from technology enthusiasts, pundits, and engineers for decades. Nonetheless, the mesmerizing capabilities demonstrated by generative AI technology in late 2022 have piqued unprecedented public interest in practical AI-powered application use cases. Perhaps for the first time, these capabilities have compelled numerous organizations to seriously explore how AI technology may help them overcome pressing application security challenges. Ironically, the same AI capabilities have also raised growing concerns about the risks that AI might pose to application security.

The advent of advanced and accessible AI technology therefore raises an interesting question. Are traditional application security tools, processes, and practices sufficient to cope with the challenges posed by AI, or does AI call for a radically different approach to application security?

Lots of opportunities

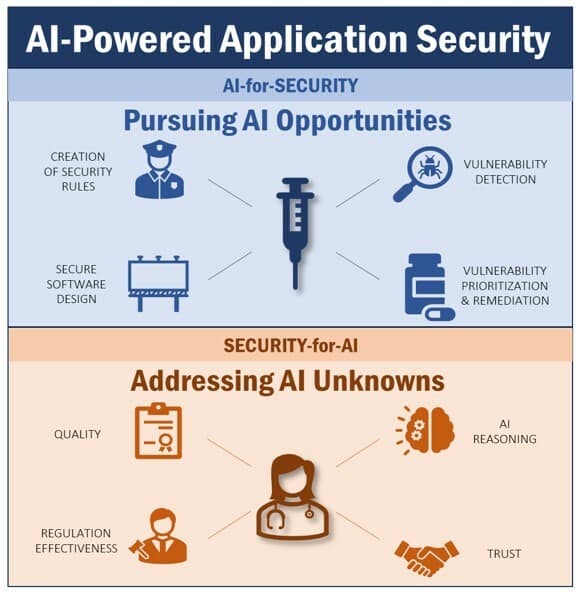

AI has the potential to elevate the value of application security thanks to an unprecedented combination of power and accessibility, which can augment, facilitate, and automate related security processes and significantly reduce the effort they often entail. It is helpful to distinguish between two cardinal AI-related application security angles: AI-for-Security (use of AI technologies to improve application security), and Security-for-AI (use of application security technologies and processes to address specific security risks attributed to AI). For example, taking an AI-for-Security perspective, AI can be used to:

- Automate the establishment of rules for application security policies, security-related approval workflows, and alert notifications

- Offer suggestions for software design that may dramatically accelerate the development of secure software

- Support effective and efficient (i.e., low noise) detection of software security vulnerabilities

- Streamline prioritization of the detected vulnerabilities

- Propose helpful advice for remediating such vulnerabilities, if not supporting fully-automated remediation altogether

The list of potential AI benefits is truly staggering.

The capabilities of recent AI advancements are particularly likely to appeal to agile organizations in need of accelerated software delivery. To remain competitive, such organizations strive to maximize the pace of software delivery, potentially producing hundreds or even thousands of releases every day. Organizations rely heavily on application security tools, such as security testing solutions, to govern the detection, prioritization, and remediation of security vulnerabilities. Unfortunately, traditional solutions may struggle to support fast software delivery objectives: wrongly detected vulnerabilities (false positives) and manual remediation overhead can make vulnerability handling inefficient. AI-powered application security tools have the potential to dramatically reduce the noise in reported vulnerabilities and accelerate their remediation. This creates a strong temptation for development and security teams to explore and embrace AI-powered tools.

Lots of unknowns

A key factor that challenges the acceptance of AI-powered application security is trust in the technology, or lack thereof. Currently, there are many unknowns concerning the application security risks of AI. In contrast with non-AI software, AI ‘learns’ from the huge amounts of data it is exposed to. Consequently, AI may not necessarily conform to the logic and rules that non-AI software is explicitly coded to follow. Furthermore, due to AI’s learning process, it may be impossible to anticipate whether explicit rules would yield better AI predictability in certain application security scenarios (e.g., a correct remediation suggestion for a vulnerability detected during security testing).

Crucially, it might be infeasible to determine with confidence how AI ‘reasons’, which in turn makes it difficult to establish if, when, and why it falters. This contrasts with traditional, non-AI software logic that follows explicit instructions designed to produce a predictable output, making it easier to identify deviations from an expected outcome. Statistical observations aside, it may also be challenging to assess how well AI models ‘behave’, and to accurately establish the adverse impact resulting from such behavior. To make matters worse, many people tend to accept what AI produces as ‘good enough’ rather than scrutinizing its output with discernment and vigilance. This is especially concerning because the quality and accuracy of AI responses to user requests are sensitive to the data used for learning. AI might not recognize insufficient, partial, or flawed data as such, potentially leading to wrong or faulty responses that have a detrimental effect on application security.

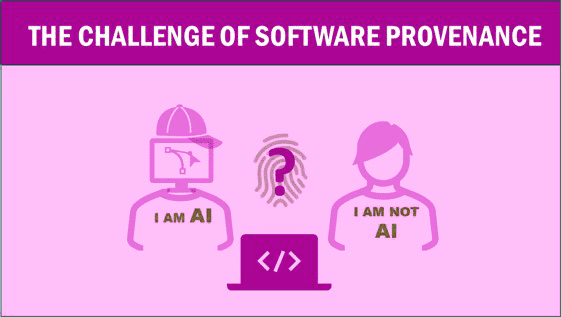

Another critical aspect concerns establishing code provenance, that is, its origin and authorship. Ascertaining provenance can enable users to expedite remediation of vulnerable application code, and can facilitate effective incident response by helping an organization infer the party likely to be responsible for code maintenance and vulnerability fixes.

Sadly, it might not be possible in many cases to glean such information for applications that were produced (or altered) by an AI tool. This complicates both attribution of liability and vulnerability remediation. Moreover, AI will likely give rise to new types of vulnerabilities, further challenging the organization’s ability to detect and handle them without hampering development agility and rapid application delivery. Many companies are already struggling to cope with a deluge of detected application security vulnerabilities. While it is plausible that AI-powered security tools may help organizations reduce the count of software security vulnerabilities, usage of AI by malicious actors presents a countervailing effect, which might significantly exacerbate the organization’s challenges.
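To make the provenance point above more concrete, here is a minimal Python sketch of how a vulnerability finding might be routed depending on whether ownership of the affected code can be established. Everything in it is a hypothetical illustration rather than a feature of any particular tool: the `ProvenanceRecord` fields, the origin labels, and the `route_finding` helper are all assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry for one source file."""
    path: str
    origin: str            # assumed labels: "human", "ai_generated", "ai_modified"
    owner: Optional[str]   # responsible maintainer, or None if unknown


def find_owner(records: Iterable[ProvenanceRecord], path: str) -> Optional[str]:
    """Return the recorded owner of `path`, or None if no owner is known."""
    for record in records:
        if record.path == path:
            return record.owner
    return None


def route_finding(records: Iterable[ProvenanceRecord], vulnerable_path: str) -> str:
    """Assign a finding to its owner, or escalate when provenance is missing."""
    owner = find_owner(records, vulnerable_path)
    if owner is None:
        # No accountable party can be inferred, so a human must decide.
        return "escalate:unknown-owner"
    return f"assign:{owner}"


if __name__ == "__main__":
    records = [
        ProvenanceRecord("billing/invoice.py", "human", "alice"),
        ProvenanceRecord("auth/token.py", "ai_generated", None),
    ]
    print(route_finding(records, "billing/invoice.py"))
    print(route_finding(records, "auth/token.py"))
```

In this sketch, the human-authored file is routed straight to its maintainer, while the AI-generated file with no recorded owner falls through to escalation, which is exactly the kind of delay that missing provenance can introduce.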

While AI may present a compelling value proposition for security vulnerability detection and remediation, it also signifies a big unknown regarding the organization’s attack surface. It is conceivable that some organizations will be so focused on gaining a competitive edge with AI that they will inadvertently overlook its potentially negative impact, or will not acknowledge the need to seriously factor in such an impact, let alone its ramifications. Such an approach would make it harder for the organization to anticipate threats and implement appropriate incident response measures, which can put the organization’s overall security posture and threat responsiveness at risk. It is therefore important to place a strong focus on Security-for-AI measures, guidelines, and boundaries, and to consider proper regulation to protect organizations from AI-related cyberattacks.

A defining moment for software development

The advent of AI represents a defining moment for software development and affects how we develop, use, and interact with software. The phrase “game-changing” may often be used unjustifiably, but in the case of AI technology, it is spot on. AI ushers in a new paradigm for software development, but it also raises security challenges and makes AI itself an appealing target for malicious actors. In the next blog post, I will review aspects of AI security vulnerabilities and AI risk that call for a new approach to coping with AI challenges.

Read the next post in this series.