The New Era of AI-Powered Application Security. Part Two: AI Security Vulnerability and Risk

This is the second part of a three-part blog series on AI-powered application security.

Part One presented concerns about AI technology that challenge traditional application security tools and processes. This part covers AI security vulnerabilities and AI-related risk. Part Three will suggest approaches for coping with these challenges.

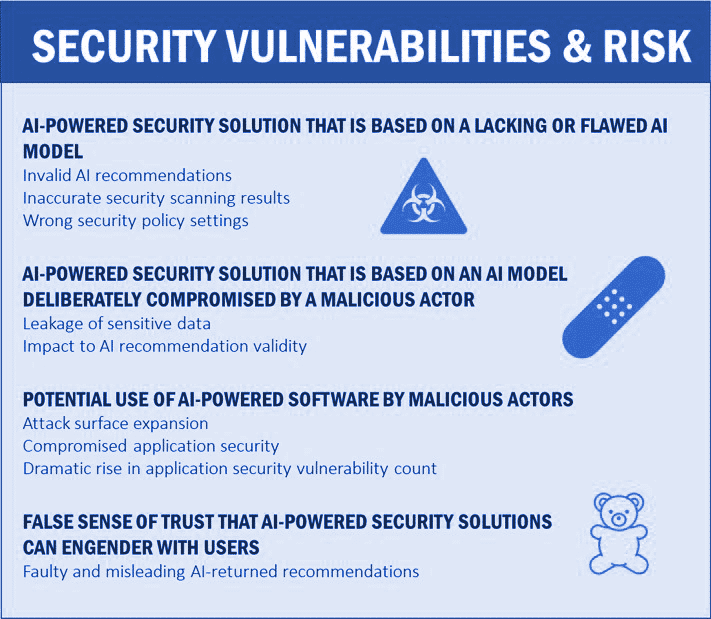

AI-related security risk manifests itself in more than one way. It can, for example, result from using an AI-powered security solution built on an AI model that is deficient in some way or has been deliberately compromised by a malicious actor. It can also result from malicious actors using AI technology to help them create and exploit vulnerabilities.

AI-powered solutions are potentially vulnerable at the model level. Incomplete, biased, or accidentally corrupted model data might undermine the validity of AI-powered application security recommendations, producing unwanted outcomes such as inaccurate security scanning results and invalid security policy settings. Model data might also be deliberately compromised by malicious actors, which raises the risk further. Notably, many types of security vulnerability commonly found in non-AI software (e.g., injection, data leakage, unauthorized access) apply to AI models too. There are, of course, also vulnerabilities that are unique to AI models.

Another major AI-related security risk stems from malicious actors' potential use of AI-powered software to discover and exploit application vulnerabilities at a scale and speed that dramatically increase the potential impact and can significantly expand an organization's attack surface. One example concerns vulnerabilities in business processes, which may arise when proper security rules are not enforced for inter-service requests at the transaction level. Common software vulnerabilities are typically confirmed either by analyzing the source code or by assessing the software's runtime behavior under real or crafted workloads. However, some risks emerge only under conditions that depend on the combined state of multiple independent components, which makes them harder for traditional security solutions to detect. AI-powered solutions can help organizations detect such vulnerabilities, but malicious actors can also employ AI technology to exploit them.

Without means to properly safeguard AI models against exploitation, using AI-powered solutions to detect and remediate vulnerabilities might lead to severe security hazards, such as remediation suggestions containing maliciously embedded code that may be difficult to detect and mitigate.

There is an additional AI-related security consideration that I mentioned in my previous blog post: trust, or more precisely, the false sense of trust that AI-powered security solutions can create. It is remarkably easy for users to compose textual requests (prompts) asking AI for security-related advice or actions. This ease can be deceptive, though. Many developers, especially those without application security expertise, may lack the knowledge to articulate their security requests accurately and completely. While AI can produce a plausible response in many use cases, it cannot always compensate for an ill-defined security prompt, and the resulting recommendations may not fully address the user's needs.

How should we cope with AI-related concerns and the perceived risk of AI?

Reaping the benefits of AI requires new levels of vigilance to address the security risks associated with the technology. The evolution of practical AI-powered application security may have only just begun, but we must already work to understand AI's potential challenges and define appropriate security requirements and measures. I'll elaborate on these in my next blog post.